Evaluating Explanation Without Ground Truth in Interpretable Machine Learning

arXiv’19, cite:24, PDF link: https://arxiv.org/pdf/1907.06831.pdf

F Yang, Texas A&M University (USA)

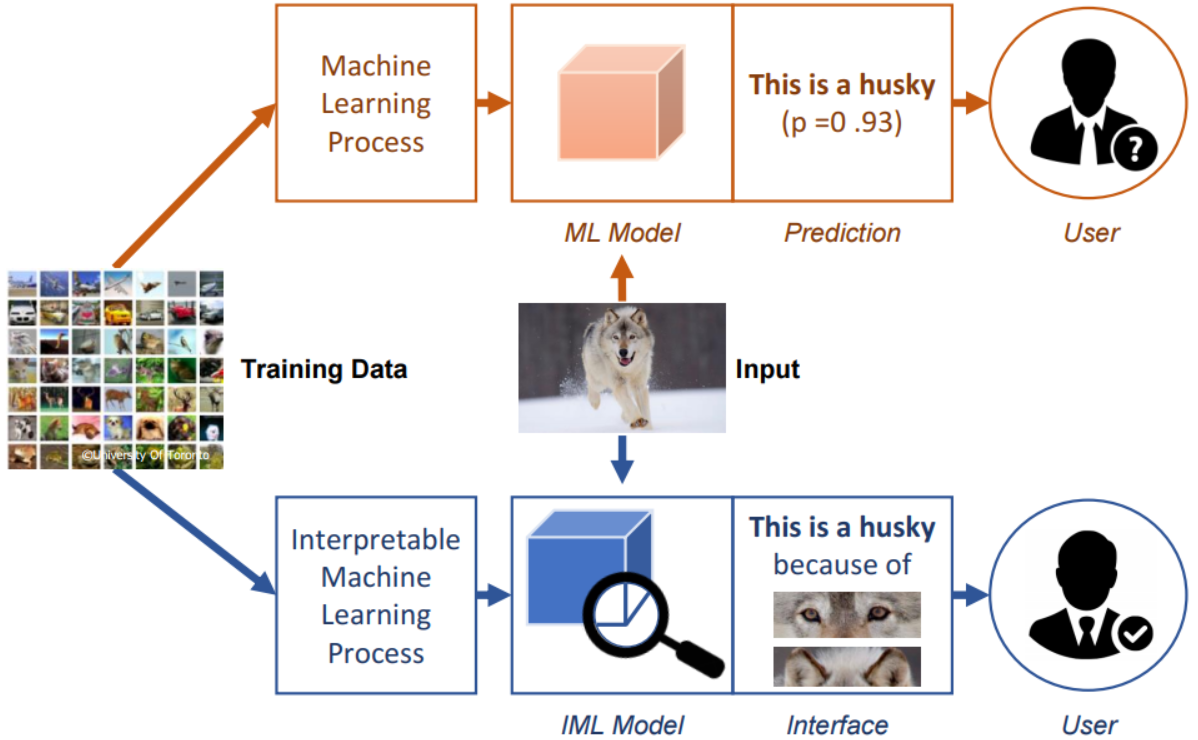

Interpretable Machine Learning (IML)

- Aims to help humans understand machine learning decisions.

- An IML model can provide specific reasons for particular machine decisions, whereas a conventional ML model may only provide prediction results with probability scores.
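To make the contrast concrete, here is a minimal sketch, assuming a toy logistic model. The feature names, weights, and the helper `predict_with_reasons` are hypothetical and do not come from the paper. It shows the difference between returning only a probability score and also returning per-feature reasons.

```python
import math

# Hypothetical weights for a toy logistic model (illustrative values only).
WEIGHTS = {"income": 0.8, "debt": -1.2, "age": 0.1}
BIAS = -0.3


def logit(x):
    """Linear score before the sigmoid."""
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def predict_proba(x):
    """What a plain ML model returns: a probability score only."""
    return 1.0 / (1.0 + math.exp(-logit(x)))


def predict_with_reasons(x):
    """What an IML model adds: per-feature contributions to this decision,
    ranked by absolute size."""
    contributions = {name: WEIGHTS[name] * x[name] for name in WEIGHTS}
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return predict_proba(x), ranked


applicant = {"income": 1.5, "debt": 2.0, "age": 0.5}
score, reasons = predict_with_reasons(applicant)
```

Here `score` alone says how confident the model is, while `reasons` lists which inputs pushed the decision and in which direction. For a linear model these contributions are exact; for more complex models they would have to be approximated.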

A two-dimensional categorization

- Scope dimension

  - global: the overall working mechanism of a model -> interpret its structure or parameters

  - local: the model's behavior for an individual instance -> analyze specific decisions

- Manner dimension

  - intrinsic: achieved by self-interpretable models

  - post-hoc (also written as posthoc): requires another independent interpretation model or technique
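The two dimensions can be illustrated with a small sketch. It assumes a hypothetical opaque function `black_box` and uses simple occlusion (replacing one feature with a baseline) as one possible post-hoc technique. Neither is taken from the paper.

```python
def black_box(x):
    """Stand-in for an opaque model: we only call it, never inspect it."""
    return 3.0 * x[0] - 2.0 * x[1] + x[0] * x[2]


def occlusion_attribution(model, x, baseline=0.0):
    """Local, post-hoc: score each feature of ONE instance by the change in
    output when that feature is replaced with a baseline value."""
    full = model(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline
        scores.append(full - model(perturbed))
    return scores


# Global, intrinsic: a linear model's weights describe its behavior for
# every input, so the parameters themselves serve as the explanation.
linear_weights = [3.0, -2.0, 0.5]

instance = [1.0, 2.0, 4.0]
local_scores = occlusion_attribution(black_box, instance)
```

The weights in `linear_weights` are readable without any extra tooling (intrinsic) and hold for all inputs (global). The values in `local_scores` come from a separate procedure applied after the fact (post-hoc) and are valid only for `instance` (local).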